# Introduction

URL:: https://kafka.apache.org/intro

Author:: Apache Kafka

## Highlights

> Event streaming is the digital equivalent of the human body's central nervous system. It is the technological foundation for the 'always-on' world where businesses are increasingly software-defined and automated, and where the user of software is more software. ([View Highlight](https://read.readwise.io/read/01gcep2sk4g51kf2dcddg1m91z))

> Technically speaking, event streaming is the practice of capturing data in real-time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events; storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams in real-time as well as retrospectively; and routing the event streams to different destination technologies as needed. Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time. ([View Highlight](https://read.readwise.io/read/01gcep2ypkm4gc27sda3nc2tt8))

> Kafka combines three key capabilities so you can implement [your use cases](https://kafka.apache.org/powered-by) for event streaming end-to-end with a single battle-tested solution:
>
> 1. To **publish** (write) and **subscribe to** (read) streams of events, including continuous import/export of your data from other systems.
> 2. To **store** streams of events durably and reliably for as long as you want.
> 3. To **process** streams of events as they occur or retrospectively. ([View Highlight](https://read.readwise.io/read/01gcep3ne93pw0nzgffwsenj02))

> **Servers**: Kafka is run as a cluster of one or more servers that can span multiple datacenters or cloud regions. Some of these servers form the storage layer, called the brokers. Other servers run [Kafka Connect](https://kafka.apache.org/documentation/#connect) to continuously import and export data as event streams to integrate Kafka with your existing systems such as relational databases as well as other Kafka clusters. To let you implement mission-critical use cases, a Kafka cluster is highly scalable and fault-tolerant: if any of its servers fails, the other servers will take over their work to ensure continuous operations without any data loss. ([View Highlight](https://read.readwise.io/read/01gch0sndpq81zxxfmrny1yj60))

> **Clients**: They allow you to write distributed applications and microservices that read, write, and process streams of events in parallel, at scale, and in a fault-tolerant manner even in the case of network problems or machine failures. Kafka ships with some such clients included. ([View Highlight](https://read.readwise.io/read/01gch0tda6e0szqn58s08x1wsj))

> An **event** records the fact that "something happened" in the world or in your business. It is also called record or message in the documentation. ([View Highlight](https://read.readwise.io/read/01gch0th0mv9j54wdcx1rbyn4a))
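As a rough illustration, an event can be modeled as a small immutable record. The Kafka documentation describes an event as having a key, a value, a timestamp, and optional metadata headers. The `Event` class and its field names below are a hypothetical Python sketch for note-taking purposes, not a type from any Kafka client library.

```python
from dataclasses import dataclass, field
from typing import Mapping


@dataclass(frozen=True)
class Event:
    """Hypothetical model of one event (also called a record or message)."""

    key: str        # who or what the event is about
    value: str      # what happened
    timestamp: str  # when it happened
    headers: Mapping[str, str] = field(default_factory=dict)  # optional metadata


# An example event: "something happened" in the business.
payment = Event(
    key="Alice",
    value="Made a payment of $200 to Bob",
    timestamp="Jun. 25, 2020 at 2:06 p.m.",
)
```

The record is frozen because an event states a fact that has already happened, so it should not change after it is created.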

> **Producers** are those client applications that publish (write) events to Kafka, and **consumers** are those that subscribe to (read and process) these events. ([View Highlight](https://read.readwise.io/read/01gch0tnnsstwsbzx8pz0d5252))
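The split between producers and consumers can be sketched with a toy in-memory log. This is only a model under simplifying assumptions: the `Producer` and `Consumer` classes, the `send` and `poll` method names, and the shared Python list are all hypothetical stand-ins, whereas real Kafka clients talk to brokers over the network. The sketch shows two things: producers and consumers do not know about each other, and each consumer keeps its own read position, so reading an event does not remove it for anyone else.

```python
from typing import List


class Producer:
    """Toy producer: appends events to a shared in-memory log."""

    def __init__(self, log: List[str]) -> None:
        self._log = log

    def send(self, event: str) -> None:
        self._log.append(event)


class Consumer:
    """Toy consumer: reads from the log and tracks its own position."""

    def __init__(self, log: List[str]) -> None:
        self._log = log
        self._offset = 0  # index of the next event this consumer will read

    def poll(self) -> List[str]:
        """Return every event appended since the last poll."""
        new_events = self._log[self._offset:]
        self._offset = len(self._log)
        return new_events


log: List[str] = []
producer = Producer(log)
billing = Consumer(log)
audit = Consumer(log)

producer.send("order-created")
producer.send("order-paid")

first_batch = billing.poll()   # billing reads both events
producer.send("order-shipped")
second_batch = billing.poll()  # billing sees only the new event
audit_batch = audit.poll()     # audit starts from the beginning and reads all three
```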

> Events are organized and durably stored in **topics**. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder. ([View Highlight](https://read.readwise.io/read/01gch0ttf6bk4ax221wswcfhba))

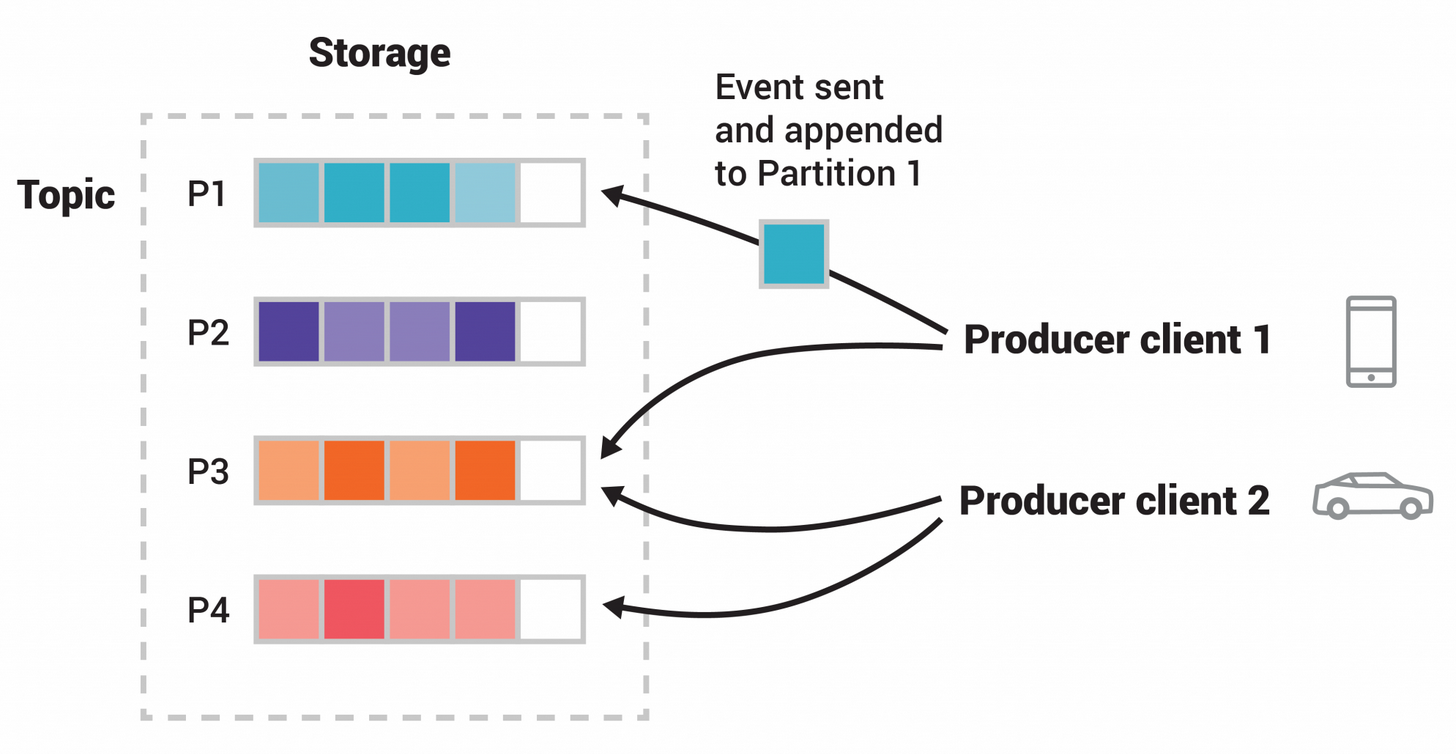

> Topics are **partitioned**, meaning a topic is spread over a number of "buckets" located on different Kafka brokers. This distributed placement of your data is very important for scalability because it allows client applications to both read and write the data from/to many brokers at the same time. ([View Highlight](https://read.readwise.io/read/01gch0v3b6wvahp30dwegdqy7b))

> Figure: This example topic has four partitions P1–P4. Two different producer clients are publishing, independently from each other, new events to the topic by writing events over the network to the topic's partitions. Events with the same key (denoted by their color in the figure) are written to the same partition. Note that both producers can write to the same partition if appropriate. ([View Highlight](https://read.readwise.io/read/01gch0vka8y00vt6je946nbcrb))
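The rule that events with the same key land in the same partition can be sketched by hashing the key and taking the result modulo the partition count. This is a minimal model, assuming four partitions as in the figure. The names `partition_for` and `publish` are hypothetical, and CRC32 is a stand-in hash chosen because it is stable across runs; it is not claimed to be the hash that Kafka's own partitioner uses.

```python
import zlib
from typing import Dict, List, Tuple

NUM_PARTITIONS = 4  # matches the four partitions in the figure


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition index with a stable hash (CRC32 stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# One append-only list of (key, value) pairs per partition.
partitions: Dict[int, List[Tuple[str, str]]] = {
    p: [] for p in range(NUM_PARTITIONS)
}


def publish(key: str, value: str) -> int:
    """Append the event to the partition chosen by its key; return the index."""
    p = partition_for(key)
    partitions[p].append((key, value))
    return p


# Two independent "producers" publish events for overlapping keys.
for key, value in [("alice", "login"), ("bob", "login"), ("alice", "purchase")]:
    publish(key, value)
for key, value in [("bob", "logout"), ("alice", "logout")]:
    publish(key, value)

# All events for one key sit in a single partition, in the order written.
alice_events = [
    value for key, value in partitions[partition_for("alice")] if key == "alice"
]
```

Because every event for a given key is appended to the same partition, the relative order of that key's events is preserved there, even when several producers write to the topic.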

---

Title: Introduction

Author: Apache Kafka

Tags: readwise, articles

date: 2024-01-30

---

## AI-Generated Summary

Apache Kafka: A Distributed Streaming Platform.

## Highlights

> Event streaming is the digital equivalent of the human body's central nervous system. It is the technological foundation for the 'always-on' world where businesses are increasingly software-defined and automated, and where the user of software is more software. ([View Highlight](https://read.readwise.io/read/01gcep2sk4g51kf2dcddg1m91z))

> Technically speaking, event streaming is the practice of capturing data in real-time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events; storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams in real-time as well as retrospectively; and routing the event streams to different destination technologies as needed. Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time. ([View Highlight](https://read.readwise.io/read/01gcep2ypkm4gc27sda3nc2tt8))

> Kafka combines three key capabilities so you can implement [your use cases](https://kafka.apache.org/powered-by) for event streaming end-to-end with a single battle-tested solution:

> 1. To **publish** (write) and **subscribe to** (read) streams of events, including continuous import/export of your data from other systems.

> 2. To **store** streams of events durably and reliably for as long as you want.

> 3. To **process** streams of events as they occur or retrospectively. ([View Highlight](https://read.readwise.io/read/01gcep3ne93pw0nzgffwsenj02))

> **Servers**: Kafka is run as a cluster of one or more servers that can span multiple datacenters or cloud regions. Some of these servers form the storage layer, called the brokers. Other servers run [Kafka Connect](https://kafka.apache.org/documentation/#connect) to continuously import and export data as event streams to integrate Kafka with your existing systems such as relational databases as well as other Kafka clusters. To let you implement mission-critical use cases, a Kafka cluster is highly scalable and fault-tolerant: if any of its servers fails, the other servers will take over their work to ensure continuous operations without any data loss. ([View Highlight](https://read.readwise.io/read/01gch0sndpq81zxxfmrny1yj60))

> **Clients**: They allow you to write distributed applications and microservices that read, write, and process streams of events in parallel, at scale, and in a fault-tolerant manner even in the case of network problems or machine failures. Kafka ships with some such clients included ([View Highlight](https://read.readwise.io/read/01gch0tda6e0szqn58s08x1wsj))

> An **event** records the fact that "something happened" in the world or in your business. It is also called record or message in the documentation. ([View Highlight](https://read.readwise.io/read/01gch0th0mv9j54wdcx1rbyn4a))

> **Producers** are those client applications that publish (write) events to Kafka, and **consumers** are those that subscribe to (read and process) these events. ([View Highlight](https://read.readwise.io/read/01gch0tnnsstwsbzx8pz0d5252))

> Events are organized and durably stored in **topics**. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder. ([View Highlight](https://read.readwise.io/read/01gch0ttf6bk4ax221wswcfhba))

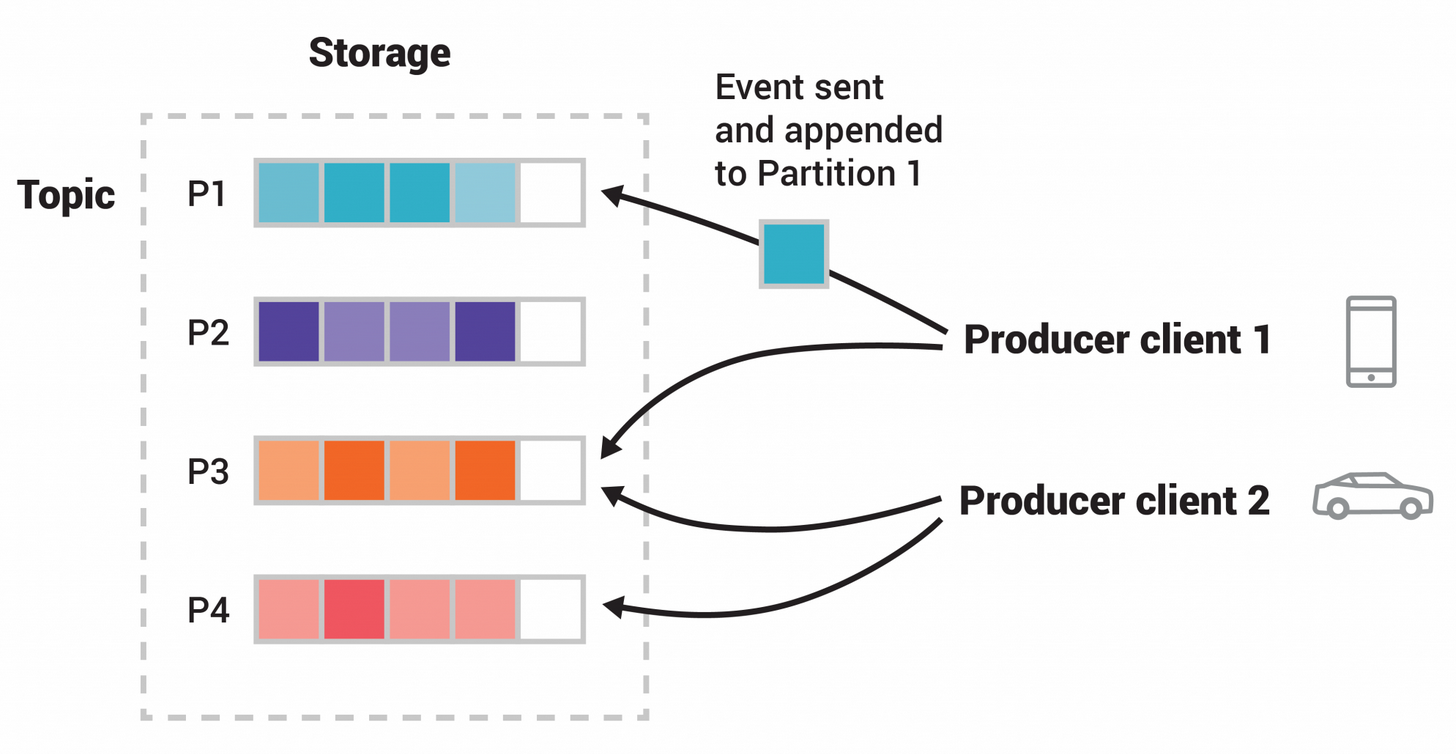

> Topics are **partitioned**, meaning a topic is spread over a number of "buckets" located on different Kafka brokers. This distributed placement of your data is very important for scalability because it allows client applications to both read and write the data from/to many brokers at the same time. ([View Highlight](https://read.readwise.io/read/01gch0v3b6wvahp30dwegdqy7b))

>  ([View Highlight](https://read.readwise.io/read/01gch0vckhj4axwezv7achdn7t))

> Figure: This example topic has four partitions P1–P4. Two different producer clients are publishing, independently from each other, new events to the topic by writing events over the network to the topic's partitions. Events with the same key (denoted by their color in the figure) are written to the same partition. Note that both producers can write to the same partition if appropriate. ([View Highlight](https://read.readwise.io/read/01gch0vka8y00vt6je946nbcrb))