# On Coordinated Omission

URL:: https://www.scylladb.com/2021/04/22/on-coordinated-omission/

Author:: Ivan Prisyazhynyy

## Highlights

> Daniel Compton in Artillery [#721](https://github.com/artilleryio/artillery/issues/721). ([View Highlight](https://read.readwise.io/read/01ghbbv0zxwkap0kypg21epndx))

> *Coordinated omission is a term [coined by Gil Tene](https://qconsf.com/sf2012/dl/qcon-sanfran-2012/slides/GilTene_HowNotToMeasureLatency.pdf) to describe the phenomenon when the measuring system inadvertently coordinates with the system being measured in a way that avoids measuring outliers.*

> To that, I would add: *…and misses sending requests.* ([View Highlight](https://read.readwise.io/read/01ghbbtvs37ptb419d2v6z4gve))

> CO is a concern because if your benchmark or a measuring system is suffering from it, then the results will be rendered useless or at least will look a lot more positive than they actually are. ([View Highlight](https://read.readwise.io/read/01ghbbvecv6x0mhe9zgpd2e8q3))

> For our purposes, there are basically two kinds of systems: open model and closed model. This [2006 research paper](https://www.usenix.org/legacy/event/nsdi06/tech/full_papers/schroeder/schroeder.pdf) gives a good breakdown of the differences between the two. For us, the important feature of *open-model systems* is that new requests arrive independent of how quickly the system processes requests. By contrast, in *closed-model systems* new job arrivals are triggered only by job completions. ([View Highlight](https://read.readwise.io/read/01ghbbwny14123crb2d24mbk24))
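As a rough illustration of the distinction, the sketch below computes arrival times under each model. The function names, the 250 ms interval, the 150 ms think time, and the service times are all hypothetical values chosen for the example; none of them come from the article.

```python
def open_model_arrivals(n, interval_ms):
    """Open model: arrival times are fixed up front, whatever the server does."""
    return [i * interval_ms for i in range(n)]


def closed_model_arrivals(service_ms, think_ms):
    """Closed model: each arrival is triggered by the previous completion."""
    arrivals, t = [], 0
    for s in service_ms:
        arrivals.append(t)
        t += s + think_ms  # the next job cannot arrive until this one is done
    return arrivals


# Hypothetical service times in milliseconds; the third job stalls for 1 s.
service_ms = [100, 100, 1000, 100, 100]
open_arrivals = open_model_arrivals(len(service_ms), 250)
closed_arrivals = closed_model_arrivals(service_ms, think_ms=150)
print(open_arrivals)    # [0, 250, 500, 750, 1000]
print(closed_arrivals)  # [0, 250, 500, 1650, 1900]
```

In the closed-model run the stall pushes every later arrival back, while the open-model arrivals are unaffected by it.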

> So now we have two problems: generating the load for the benchmark and measuring the resulting latency. This is where the problem of Coordinated Omission comes into play. ([View Highlight](https://read.readwise.io/read/01ghbc6bx62vmvz1ry5dw5zzqc))

> The problem comes when X reaches a certain size. ([View Highlight](https://read.readwise.io/read/01ghbc7e970z1184s3yzfaqm30))

> So, what to do? The answer is obvious: let’s stop creating threads. We can preallocate them and send requests like this: ([View Highlight](https://read.readwise.io/read/01ghbc87ygscfh0ye18b5c0whc))

> But now we have a new problem.

> We meant to simulate a workload for an open-model system but wound up with a closed-model system instead. ([View Highlight](https://read.readwise.io/read/01ghbc8bhwck25bgz4yngz4323))

> What’s the problem with that? The requests are sent *synchronously* and *sequentially*. That means that every worker-thread may send only some bounded number of requests in a unit of time. This is the key issue: the worker performance depends on how fast the target system processes its requests. ([View Highlight](https://read.readwise.io/read/01ghbc8rb3agw3kfnm2krjgmxr))
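A back-of-the-envelope sketch of that bound, assuming each worker sends one request at a time and waits for the response before sending the next. The function name and the numbers are illustrative only.

```python
def max_closed_throughput(workers, latency_ms):
    """Upper bound on requests per second for synchronous, sequential workers."""
    # Each worker completes at most 1000 / latency_ms requests per second.
    return workers * 1000 / latency_ms


print(max_closed_throughput(10, 50))   # 200.0
print(max_closed_throughput(10, 500))  # 20.0
```

With ten workers, a tenfold rise in response time cuts the achievable load tenfold, so the loader slows down exactly when the target system does.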

> There are two ways to implement a worker schedule: *static* and *dynamic*. A static schedule is a function of the start timestamp; the firing points don’t move. A *dynamic schedule* is one where the next firing starts after the last request has completed but not before the minimum time delay between requests. ([View Highlight](https://read.readwise.io/read/01ghbc9hy9gz956ngbfpkxh7wj))
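The two schedules can be sketched as follows. This is a minimal model rather than the article's code: it assumes the minimum delay is measured from the previous firing (the highlight does not say), and the names and timings are made up for the example.

```python
def static_fire_times(start_ms, interval_ms, n):
    """Static schedule: firing points depend only on the start timestamp."""
    return [start_ms + i * interval_ms for i in range(n)]


def dynamic_fire_times(min_delay_ms, service_ms):
    """Dynamic schedule: fire after the previous request completes,
    but no sooner than min_delay_ms after the previous firing (assumption)."""
    fires, t = [], 0
    for s in service_ms:
        fires.append(t)
        t = max(t + min_delay_ms, t + s)
    return fires


# Hypothetical service times in milliseconds; the third request takes 1 s.
service_ms = [100, 100, 1000, 100]
print(static_fire_times(0, 250, len(service_ms)))  # [0, 250, 500, 750]
print(dynamic_fire_times(250, service_ms))         # [0, 250, 500, 1500]
```

The static firing points stay put regardless of the slow request; the dynamic schedule slides the fourth firing from 750 ms to 1500 ms.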

> A key characteristic of a schedule is how it processes outliers—moments when the worker can’t keep up with the assigned plan, and the target system’s throughput appears to be lower than the loader’s throughput.

> This is exactly the first part of the Coordinated Omission problem. There are two approaches to outliers: Queuing and Queueless. ([View Highlight](https://read.readwise.io/read/01ghbcgq7b10q4h3yra52b32w1))

> A good queueing implementation tracks requests that have to be fired and tries to fire them as soon as it can to get the schedule back on track. A not-so-good implementation shifts the entire schedule by pushing delayed requests into the next trigger point.

> By contrast, a Queueless implementation simply drops missed requests. ([View Highlight](https://read.readwise.io/read/01ghbcgwwadsz4z2t5yvbzdaex))

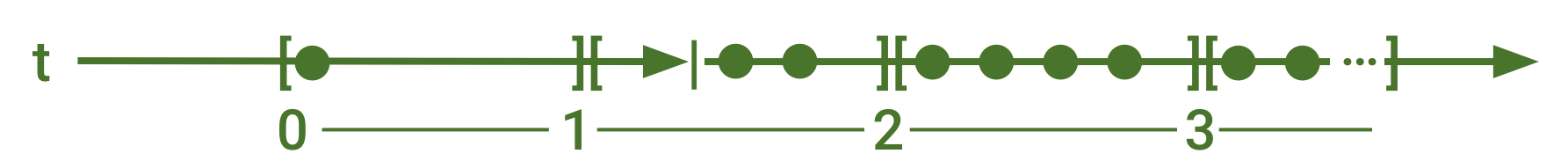

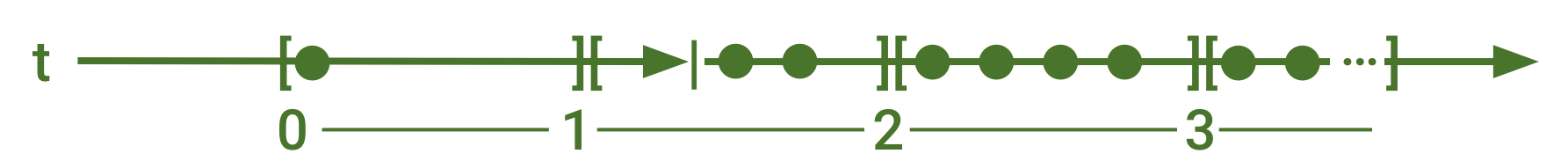

> As the name suggests, a *Queueless* approach to scheduling doesn’t queue requests. It simply skips ones that aren’t sent on the proper schedule.

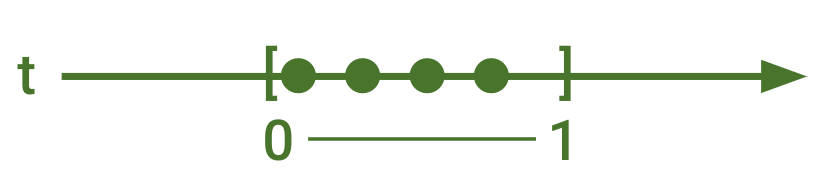

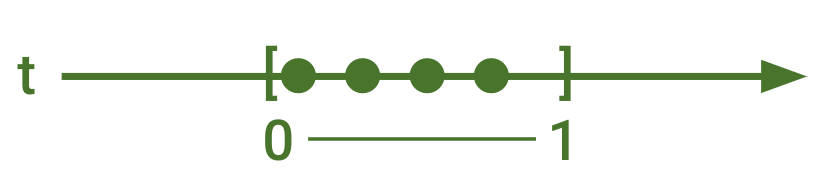

> *Our worker’s schedule: 250ms per request*

> *Queueless implementation that ignores requests that it did not send*

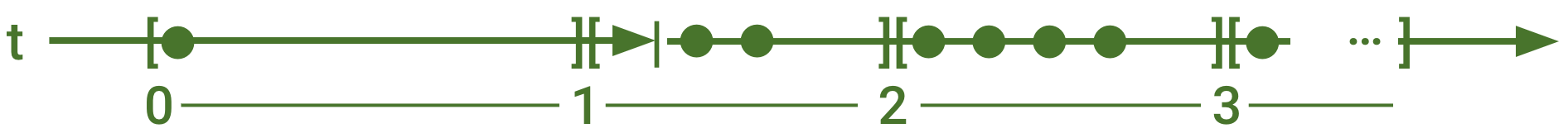

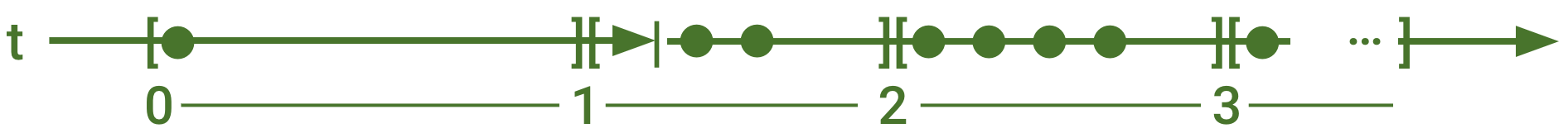

> *Queueless implementation that ignores schedule* ([View Highlight](https://read.readwise.io/read/01ghbccyzv5n78rpdb8wscmdpr))

> Overall, there are two approaches for generating load with a closed-model loader to simulate an open-model system. If you can, use *Queuing because it does not omit generating requests according to the schedule* along with a static schedule because it will always converge your performance back to the expected plan. ([View Highlight](https://read.readwise.io/read/01ghbccfscc5n12h6dmfrygh46))
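To make the difference between the two approaches concrete, here is a small simulation of one synchronous worker on a static 250 ms schedule. It is a sketch under assumed conditions: time is simulated rather than measured, `run_queuing` and `run_queueless` are hypothetical names, and the service times are invented so that the second request stalls for one second.

```python
def run_queuing(interval_ms, service_ms):
    """Queuing: every scheduled request is sent; late ones fire as soon
    as the worker is free. Returns (intended, actual) send times."""
    sent, free_at = [], 0
    for i, s in enumerate(service_ms):
        intended = i * interval_ms
        actual = max(intended, free_at)
        sent.append((intended, actual))
        free_at = actual + s
    return sent


def run_queueless(interval_ms, service_ms, slots):
    """Queueless: slots that pass while the worker is busy are dropped.
    Returns (send times, dropped slot times)."""
    sent, dropped, free_at = [], [], 0
    services = iter(service_ms)
    for i in range(slots):
        intended = i * interval_ms
        if intended < free_at:
            dropped.append(intended)  # missed slot, never sent
            continue
        s = next(services, None)
        if s is None:
            break  # no more simulated service times
        sent.append(intended)
        free_at = intended + s
    return sent, dropped


service_ms = [100, 1000, 100, 100, 100]
print(run_queuing(250, service_ms))
# [(0, 0), (250, 250), (500, 1250), (750, 1350), (1000, 1450)]
print(run_queueless(250, service_ms, slots=8))
# ([0, 250, 1250, 1500, 1750], [500, 750, 1000])
```

The queuing run still issues all five requests and catches up after the stall, whereas the queueless run silently omits the three slots that fell inside it.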

> Which brings us to the second part of the Coordinated Omission problem: what to do with latency outliers.

> Basically, we need to account for workers that send their requests late. So far as the simulated system is concerned, the request was fired in time, making latency look longer than it really was. We need to adjust the latency measurement to account for the delay in the request. ([View Highlight](https://read.readwise.io/read/01ghbcdbd0t2qhn9rkfkkhh6ap))

> *Request latency = (now() – intended_time) + service_time*

> where

> *service_time = end – start*

> *intended_time(request) = start + (request – 1) \* time_per_request*

> This is called latency correction. ([View Highlight](https://read.readwise.io/read/01ghbcdg30drpykanwbzkaygft))
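A minimal sketch of the correction, following the formula in the highlight. The parameter names `schedule_start_ms` and `sent_ms` are introduced here to separate the two meanings of `start` (when the schedule began versus when the request was actually sent); that split is an interpretation, not something the article spells out.

```python
def intended_time(schedule_start_ms, request, time_per_request_ms):
    """When request number `request` (1-based) should have been sent."""
    return schedule_start_ms + (request - 1) * time_per_request_ms


def corrected_latency(schedule_start_ms, request, time_per_request_ms,
                      sent_ms, end_ms):
    """Waiting time before the send plus the measured service time."""
    service_time = end_ms - sent_ms
    waiting = sent_ms - intended_time(schedule_start_ms, request,
                                      time_per_request_ms)
    return waiting + service_time


# Request 3 on a 250 ms schedule was due at 500 ms but went out at 1250 ms
# and finished at 1350 ms (hypothetical timings).
print(corrected_latency(0, 3, 250, sent_ms=1250, end_ms=1350))  # 850
```

The uncorrected measurement would report only the 100 ms service time; the corrected value also charges the 750 ms the request spent waiting behind the stalled worker.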

---

Title: On Coordinated Omission

Author: Ivan Prisyazhynyy

Tags: readwise, articles

date: 2024-01-30

---

## AI-Generated Summary

What is coordinated omission? Why should you be wary if your benchmark results look too good? Plus how to correct for this in your testing.
